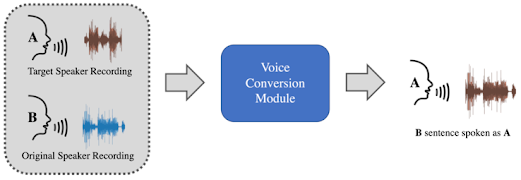

Threat actors are using Artificial Intelligence (AI) and Machine Learning (ML) voice cloning tools to spread misinformation and run cybercriminal scams.

It doesn’t take much audio to make a decent reproduction of a voice: only about 10 to 20 seconds of recording. The cloning tool extracts the unique details of the victim’s voice from that clip. A threat actor can simply call a victim and pretend to be a salesperson, for example, to capture enough audio to make it work.

Here are some actual deepfake audio recordings. Some are humorous, some are cool, but all could be used maliciously in some form:

• CNN reporter calls his parents using a deepfake voice. (CNN)

• No, Tom Cruise isn’t on TikTok. It’s a deepfake. (CNN)

• Twenty of the best deepfake examples that terrified and amused the internet. (Creative Bloq)
