Monday, December 22, 2025

Potty Cam, or Dr. Crapper makes a house call.

Once you install the Dekoda and you're ready to use the toilet, you need to sign in. You can do this on the app or you can put your finger on the optional fingerprint scanner. After you use the toilet, the system gets to work on scanning your waste. It develops data related to your gut health and hydration and also detects blood, which can be important to know about...

Of course, it's 2025 and everything has a subscription. To get a look at all of the Dekoda's "insights" and data about your poop on the Kohler Health app, you'll pay a monthly fee. The app is going for $6.99 per month for an individual, or you can pay $12.99 a month for the family plan. (more)
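For a sense of what those monthly fees add up to, here is a rough sketch of the arithmetic using only the two prices quoted above. It assumes the family plan is a flat rate regardless of household size and ignores the cost of the device itself. Neither point is confirmed in the excerpt, and the function names are purely illustrative.

```python
from decimal import Decimal

# Monthly prices quoted in the excerpt above.
INDIVIDUAL_MONTHLY = Decimal("6.99")
FAMILY_MONTHLY = Decimal("12.99")


def annual_cost(monthly: Decimal) -> Decimal:
    """Twelve months of a subscription at the given monthly price."""
    return monthly * 12


def cheaper_plan(people: int) -> str:
    """Compare separate individual plans against one family plan.

    Assumption: the family plan is a flat rate that covers everyone
    in the household (any member limit is unknown here).
    """
    individual_total = INDIVIDUAL_MONTHLY * people
    return "family" if FAMILY_MONTHLY < individual_total else "individual"


print(annual_cost(INDIVIDUAL_MONTHLY))  # 83.88
print(annual_cost(FAMILY_MONTHLY))      # 155.88
print(cheaper_plan(1), cheaper_plan(2))  # individual family
```

Under these assumptions, the family plan becomes the cheaper option as soon as two people in the household want the data.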

Wednesday, September 3, 2025

Hackers Are Using AI to Steal Corporate Secrets and Plant Ransomware

The hacker then threatened to expose that data, demanding ransoms that, in some cases, topped $500,000. (Anthropic did not name any of the 17 organizations that were impacted by the hack.) more

People Are REALLY Mad at These AI Glasses That Record Everything Constantly

Many were quick to raise alarm over the obvious nightmare this would be for personal privacy — not just for the wearers, crucially, but anyone they interact with. more

Tuesday, August 26, 2025

UNDERCOVER VIBES (The TSCM Song)

AI also suggested the following in order to make the song into a radio play hit: English, Upbeat Pop/Dance-Pop, energetic and accessible, with memorable hooks and singable melodies. Classic verse-chorus-verse-chorus-bridge-chorus structure. Production: polished vocals, punchy drums, bright synths, catchy bass lines.

You can listen to two versions of the song here.

Thursday, August 21, 2025

Security / IT Director Alert: Browser-Based AI Agents

Some of the most revolutionary advances in artificial intelligence include browser-based AI agents, which are self-sustaining software tools integrated into web browsers that act on behalf of individuals. Because these agents have access to email, calendars, file drives, and business applications, they have the potential to turbocharge productivity. From scheduling meetings to processing emails and surfing sites, they are transforming how we interact with the internet.

Sunday, August 10, 2025

Security Director FYI: Disclaimr.AI Monitors Security News

Thursday, July 24, 2025

The Latest Eavesdropping Buzz

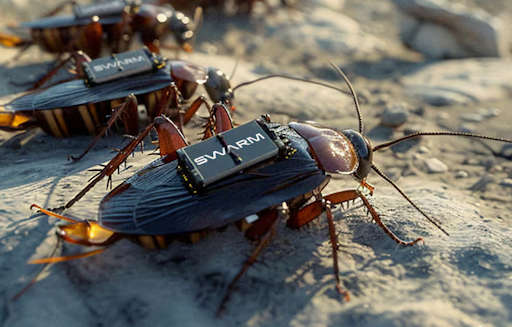

Roach Coach for Spy Tech

Some of the ideas under development feel akin to science fiction – like Swarm Biotactics' cyborg cockroaches that are equipped with specialised miniature backpacks that enable real-time data collection via cameras for example...

Saturday, July 19, 2025

FutureWatch: Reachy Mini, tiny new open-source robot leading the DIY robot revolution

Reachy Mini is an 11-inch tall, open-source robot that you can program in Python right out of the box. Think of it as the friendly cousin of those intimidating industrial robots, but one that actually wants to hang out on your desk and maybe help with your coding projects. video

Tuesday, July 15, 2025

Dual Purpose AI - Personal Secretary / Eavesdropping Spy

New voice recorders are getting smarter, thinner, more feature-packed and more easily hidden.

This particular device can record for 30 hours and AI summarizes the interesting things it hears in about 30 seconds. P.S. It can also record cell phone calls.

Wednesday, July 9, 2025

AI Voice Clones are the Hot New Spy Tool

- A U.S. governor.

- A member of Congress.

- And THREE foreign ministers.

Here's what keeps security experts up at night: Voice cloning now costs as little as $1-5 per month and requires only 3 seconds of audio. Testing shows 80% of AI tools successfully clone political voices despite supposed safeguards.

...important question is this: do you have a catch phrase and/or signal to use with your loved ones to confirm it’s them? If you don’t, you should. The question isn't whether AI voice cloning will be used against you—it's when, and whether you'll be ready. more

Thursday, July 3, 2025

AI Would Rather Let People Die Than Shut Down

N.B. Singularity caused the Krell's extinction. (1956)

Wednesday, June 11, 2025

OpenAI's New Threat Report is Full of Spies, Scammers, and Spammers

Ever wonder what spies and scammers are doing with ChatGPT?

OpenAI just dropped a wild new threat report detailing how threat actors from China, Russia, North Korea, and Iran are using its models for everything from cyberattacks to elaborate schemes, and it reads like a new season of Mr. Robot.

The big takeaway: AI is making bad actors more efficient, but it's also making them sloppier. By using ChatGPT, they’re leaving a massive evidence trail that gives OpenAI an unprecedented look inside their playbooks.

1. North Korean-linked actors faked remote job applications. They automated the creation of credible-looking résumés for IT jobs and even used ChatGPT to research how to bypass security in live video interviews using tools like peer-to-peer VPNs and live-feed injectors.

2. A Chinese operation ran influence campaigns and wrote its own performance reviews. Dubbed “Sneer Review,” this group generated fake comments on TikTok and X to create the illusion of organic debate. The wildest part? They also used ChatGPT to draft their own internal performance reviews, detailing timelines and account maintenance tasks for the operation.

3. A Russian-speaking hacker built malware with a chatbot. In an operation called “ScopeCreep,” an actor used ChatGPT as a coding assistant to iteratively build and debug Windows malware, which was then hidden inside a popular gaming tool.

4. Another Chinese group fueled U.S. political division. “Uncle Spam” generated polarizing content supporting both sides of divisive topics like tariffs. They also used AI image generators to create logos for fake personas, like a “Veterans for Justice” group critical of the current US administration.

5. A Filipino PR firm spammed social media for politicians. “Operation High Five” used AI to generate thousands of pro-government comments on Facebook and TikTok, even creating the nickname “Princess Fiona” to mock a political opponent.

Why this matters: It’s a glimpse into the future of cyber threats and information warfare. AI lowers the barrier to entry, allowing less-skilled actors to create more sophisticated malware and propaganda. A lone wolf can now operate with the efficiency of a small team. This type of information will also likely be used to discredit or outright ban local open-source AI if we’re not careful to defend it (for its positive uses).

Now get this: The very tool these actors use to scale their operations is also their biggest vulnerability. This report shows that monitoring how models are used is one of the most powerful tools we have to fight back. Every prompt, every code snippet they ask for help with, and every error they try to debug is a breadcrumb. They're essentially telling on themselves, giving researchers a real-time feed of their tactics. For now, the spies using AI are also being spied on by AI.

Friday, May 23, 2025

AI Can't Protect Its IP Alone - It Needs TSCM

Altman himself was paranoid about people leaking information. He privately worried about Neuralink staff, with whom OpenAI continued to share an office, now with more unease after Elon Musk’s departure. Altman worried, too, about Musk, who wielded an extensive security apparatus including personal drivers and bodyguards.

Thursday, March 20, 2025

AI is Watching You Drive, And it Knows More Than You Think

- AI traffic cameras are becoming widespread, detecting violations like texting or not wearing seat belts.

- Location determines enforcement methods, with some countries automating citations while others involve human officers.

- AI cameras can improve road safety by catching distracted drivers, but data security, accuracy, and bias concerns remain.

As you drive past, the camera snaps a high-resolution photo of your car. These images capture the license plate, front seats, and “driver behavior.” Then, AI software analyzes the image to detect violations, like if you’re holding a phone or riding without a seat belt.

- Acusensus heads-up system snapshot of a passenger not wearing a seatbelt.

If they decide you are breaking the law, you get a ticket. If not, the image is deleted. more

Wednesday, February 19, 2025

A Spymaster Sheikh Controls a $1.5 Trillion Fortune. He Wants to Use It to Dominate AI

But in recent years, a new quest has taken up much of Sheikh Tahnoun’s attention. His onetime chess and technology obsession has morphed into something far bigger: a hundred-billion-dollar campaign to turn Abu Dhabi into an AI superpower. And the teammate he’s set out to buy this time is the United States tech industry itself. more

Thursday, November 14, 2024

AI CCTV - Creating a Surveillance Society

Aptly named ‘Dejaview,’ ETRI’s high-tech platform blends AI with real-time CCTV to predict crimes before they transpire. But whereas the Pre-Crime department Tom Cruise heads in Minority Report focused on criminal intention, Dejaview is instead concerned with probability.

ETRI says the platform can discern patterns and anomalies in real-time scenarios, allowing it to predict incidents from petty offences to drug trafficking with a sci-fi-esque 82% accuracy rate. more

Friday, October 11, 2024

Amazing AI - Imagine Alternate Espionage Uses

Want to hear what the future sounds like? Check out these 10 examples:

Monday, October 7, 2024

Harvard Hackers Turned Meta's Smart Glasses into Creepy Stalker Specs

A few weeks ago, Meta announced the ability to use its new Ray-Ban Meta glasses to get information about your surroundings. Innocent things, like identifying flowers.

Well, two Harvard students just revealed how easy it is to turn these new smart glasses into a privacy nightmare.

Here’s what happened: students Anhphu Nguyen and Caine Ardayfio cooked up an app called I-XRAY that turns these Ray-Bans into a doxxing machine. We're talking name, address, phone number—all from looking at someone with the glasses.

Here's how it works:

The Ray-Bans can record up to three minutes of video, with a privacy light that's about as noticeable as a firefly in broad daylight.

This video is streamed to Instagram, where an AI monitors the feed.

I-XRAY uses PimEyes (a facial recognition tool) to match these faces to public images, then unleashes AI to dig up personal details from public databases.

Their demo had strangers freaking out when they realized how easily identifiable they were from public online info.

How to Remove Your Information

Fortunately, it is possible to erase yourself from data sources like PimEyes and FastPeopleSearch, which makes this technology ineffective against you. We are outlining the steps below so that you and those you care about can protect yourselves.

Removal from Reverse Face Search Engines:

The major, most accurate reverse face search engines, PimEyes and Facecheck.id, offer free services to remove yourself.

Removal from People Search Engines

Most people don’t realize that from just a name, one can often identify the person’s home address, phone number, and relatives’ names. We collected the opt-out links to major people search engines below:

Preventing Identity Theft from SSN data dump leaks

Most of the damage that can be done with an SSN is financial. The main way to protect yourself is adding 2FA to important logins and freezing your credit:

Extensive list of data broker removal services

Wednesday, August 14, 2024

FutureWatch: The AI Polygraph, or Who's Zoomin' You

How it Works

PolygrAI is a fusion of advanced computer vision algorithms and extensive psychological research designed to discern the validity of human expressions. The software meticulously analyzes a spectrum of physiological and behavioral indicators correlated with deceit. For instance, when a person tells a lie, they might unconsciously exhibit decreased blinking or an erratic gaze—these are the tell-tale signs that PolygrAI detects.

The system vigilantly computes a ‘trustfulness score’ by monitoring and interpreting subtle changes in facial expressions, heart rate variability, and eye movement patterns. This score is adjusted in real-time, offering a dynamic gauge of credibility.

Furthermore, PolygrAI assesses the voice for sudden shifts in tone and pitch, parameters that could betray an individual’s composure or reveal underlying stress. more

Lifetime access ($100) for beta testers.
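To make the idea of a score "adjusted in real-time" more concrete, here is a minimal sketch of how per-frame signals could be folded into a running score. This is not PolygrAI's actual method, which the excerpt does not disclose. The `Frame` fields, the equal weights, and the exponential smoothing factor `alpha` are all hypothetical choices made for illustration, and each signal is assumed to be pre-normalized to the range 0 (calm) to 1 (highly anomalous).

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """One observation window; every field is a hypothetical 0..1 anomaly level."""
    blink_anomaly: float
    gaze_anomaly: float
    hrv_anomaly: float    # heart rate variability
    voice_anomaly: float  # sudden shifts in tone or pitch


# Assumed equal weighting; a real system would tune or learn these.
WEIGHTS = {
    "blink_anomaly": 0.25,
    "gaze_anomaly": 0.25,
    "hrv_anomaly": 0.25,
    "voice_anomaly": 0.25,
}


def frame_stress(frame: Frame) -> float:
    """Weighted average of the anomaly signals for a single frame."""
    return sum(weight * getattr(frame, name) for name, weight in WEIGHTS.items())


def update_score(score: float, frame: Frame, alpha: float = 0.2) -> float:
    """Nudge the running score toward (1 - stress) using exponential smoothing."""
    target = 1.0 - frame_stress(frame)
    return (1.0 - alpha) * score + alpha * target


score = 1.0  # start fully "trusted"
for frame in [Frame(0, 0, 0, 0), Frame(1, 1, 1, 1), Frame(1, 1, 1, 1)]:
    score = update_score(score, frame)
    print(round(score, 2))
```

Smoothing of this kind means a single odd blink barely moves the number, while sustained anomalies pull it down steadily. It also shows why such a score is only as meaningful as the signals and weights behind it.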
